1. The challenge for the modern blockchain data stack

There are several challenges that a modern blockchain indexing startup may face, including:

- Massive amounts of data. As the amount of data on the blockchain increases, the data index must scale up to handle the increased load and provide efficient access to the data. This leads to higher storage costs, slow metrics calculation, and increased load on the database server.

- Complex data processing pipeline. Blockchain technology is complex, and building a comprehensive and reliable data index requires a deep understanding of the underlying data structures and algorithms. The diversity of blockchain implementations adds to this complexity. For example, NFTs on Ethereum are usually created within smart contracts following the ERC721 and ERC1155 formats. In contrast, NFTs on Polkadot, for instance, are usually built directly into the blockchain runtime. Both should be considered NFTs and stored as such.

- Integration capabilities. To provide maximum value to users, a blockchain indexing solution may need to integrate its data index with other systems, such as analytics platforms or APIs. This is challenging and requires significant effort in the architecture design.
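As a minimal sketch of what "stored as such" can look like, the Python below maps an EVM contract event and a runtime-level event into one shared record type. Every name here (`NftTransfer`, the dictionary keys, the chain labels) is a hypothetical chosen for illustration, not Footprint Analytics' actual schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NftTransfer:
    """Hypothetical unified record for an NFT transfer on any chain."""
    chain: str
    standard: str      # "ERC721", "ERC1155", or "runtime"
    collection: str    # contract address or runtime collection id
    token_id: str
    sender: str
    receiver: str


def from_evm_event(chain: str, standard: str, event: dict) -> NftTransfer:
    # Assumes an already-decoded contract event with these (made-up) keys.
    return NftTransfer(
        chain=chain,
        standard=standard,
        collection=event["contract_address"],
        token_id=str(event["token_id"]),
        sender=event["from"],
        receiver=event["to"],
    )


def from_runtime_event(chain: str, event: dict) -> NftTransfer:
    # Assumes a runtime-level event (no smart contract) with these (made-up) keys.
    return NftTransfer(
        chain=chain,
        standard="runtime",
        collection=str(event["collection_id"]),
        token_id=str(event["item_id"]),
        sender=event["from"],
        receiver=event["to"],
    )


evm = from_evm_event(
    "ethereum", "ERC721",
    {"contract_address": "0xabc", "token_id": 7, "from": "0x01", "to": "0x02"},
)
runtime = from_runtime_event(
    "polkadot",
    {"collection_id": 3, "item_id": 9, "from": "alice", "to": "bob"},
)
```

Both records now share the same columns, so a downstream metric can treat them uniformly regardless of how the chain implements NFTs.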

As blockchain technology has become more widespread, the amount of data stored on the blockchain has increased. This is because more people are using the technology, and each transaction adds new data to the blockchain. Additionally, blockchain technology has evolved from simple money-transfer applications, such as those involving Bitcoin, to more complex applications that implement business logic within smart contracts. These smart contracts can generate large amounts of data, contributing to the increased complexity and size of the blockchain.

In this article, we review the evolution of Footprint Analytics' technology architecture in stages as a case study to explore how the Iceberg-Trino technology stack addresses the challenges of on-chain data.

Footprint Analytics has indexed data from about 22 public blockchains, 17 NFT marketplaces, 1,900 GameFi projects, and over 100,000 NFT collections into a semantic abstraction data layer. It's the most comprehensive blockchain data warehouse solution in the world.

Blockchain data, which includes over 20 billion rows of financial transaction records that data analysts query frequently, is different from the ingestion logs found in traditional data warehouses.

We have gone through 3 major upgrades in the past several months to meet growing business requirements:

2. Architecture 1.0 BigQuery

At the beginning of Footprint Analytics, we used Google BigQuery as our storage and query engine. BigQuery is a great product: it is blazingly fast, easy to use, and provides dynamic computing power and a flexible UDF syntax that helps us get the job done quickly.

However, BigQuery also has several problems.

- Data is not compressed, resulting in high costs, especially when storing the raw data of the more than 22 blockchains that Footprint Analytics indexes.

- Insufficient concurrency: BigQuery only supports 100 simultaneous queries, which is unsuitable for the high-concurrency scenarios Footprint Analytics faces when serving many analysts and users.

- Lock-in with Google BigQuery, which is a closed-source product.

So we decided to explore alternative architectures.

3. Architecture 2.0 OLAP

We were very interested in some of the OLAP products that had become very popular. The most attractive advantage of OLAP is its query response time: it typically returns results over massive amounts of data in under a second, and it can also support thousands of concurrent queries.

We picked one of the best OLAP databases, Doris, to give it a try. This engine performs well. However, we soon ran into some other issues:

- Data types such as Array or JSON are not yet supported (Nov 2022). Arrays are a common data type in some blockchains, for instance the topics field in EVM logs. Being unable to compute on Array directly affects our ability to compute many business metrics.

- Limited support for DBT and for merge statements. These are common requirements for data engineers in ETL/ELT scenarios where we need to update some newly indexed data.
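To illustrate why array support matters, here is a minimal Python sketch that reads the topics array of an EVM log to decode an ERC721 `Transfer` event. The first topic is the keccak-256 hash of the event signature `Transfer(address,address,uint256)`; the remaining topics carry the indexed parameters. The log dictionary and the helper names are assumptions made for this example, not part of any specific library.

```python
from typing import Optional

# keccak-256 of "Transfer(address,address,uint256)", shared by ERC20 and ERC721.
TRANSFER_SIG = "0xddf252ad1be2c89b69c2b068fc378daa952ba7f163c4a11628f55a4df523b3ef"


def topic_to_address(topic: str) -> str:
    # An indexed address is left-padded to 32 bytes; the last 20 bytes
    # (40 hex characters) are the address itself.
    return "0x" + topic[-40:]


def decode_erc721_transfer(log: dict) -> Optional[dict]:
    """Return from/to/token_id for an ERC721 Transfer log, else None."""
    topics = log["topics"]
    # ERC721 indexes all three parameters, so the log carries 4 topics
    # (an ERC20 Transfer carries only 3, with the amount in the data field).
    if len(topics) != 4 or topics[0].lower() != TRANSFER_SIG:
        return None
    return {
        "from": topic_to_address(topics[1]),
        "to": topic_to_address(topics[2]),
        "token_id": int(topics[3], 16),
    }


# Hypothetical log, shaped like an eth_getLogs result.
sample_log = {
    "topics": [
        TRANSFER_SIG,
        "0x" + "0" * 24 + "11" * 20,
        "0x" + "0" * 24 + "22" * 20,
        "0x" + "0" * 63 + "7",
    ]
}
decoded = decode_erc721_transfer(sample_log)
```

Without array indexing in the engine, even this simple "take element 1, 2, and 3 of topics" step has to happen outside the database.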

That being said, we couldn't use Doris for our whole data pipeline in production, so we tried to use Doris as an OLAP database to solve part of our problem in the data production pipeline, acting as a query engine and providing fast and highly concurrent query capabilities.

Unfortunately, we couldn't replace BigQuery with Doris, so we had to periodically synchronize data from BigQuery to Doris and use Doris only as a query engine. This synchronization process had several issues, one of which was that update writes piled up quickly when the OLAP engine was busy serving queries to front-end clients. As a result, the write process slowed down, and synchronization took much longer and sometimes even became impossible to finish.

We realized that OLAP could solve several of the issues we were facing but could not become the turnkey solution for Footprint Analytics, especially for the data processing pipeline. Our problem is bigger and more complex, and OLAP as a query engine alone was not enough for us.

4. Architecture 3.0 Iceberg + Trino

Welcome to Footprint Analytics architecture 3.0, a complete overhaul of the underlying architecture. We have redesigned the entire architecture from the ground up to separate the storage, computation, and query of data into three different pieces, taking lessons from the two earlier architectures of Footprint Analytics and learning from the experience of other successful big data projects like Uber, Netflix, and Databricks.

4.1. Introduction of the data lake

We first turned our attention to the data lake, a newer type of data storage for both structured and unstructured data. A data lake is a good fit for on-chain data storage because the formats of on-chain data range widely, from unstructured raw data to the structured abstraction data Footprint Analytics is well known for. We expected to use a data lake to solve the problem of data storage, and ideally it would also support mainstream compute engines such as Spark and Flink, so that it wouldn't be a pain to integrate with different types of processing engines as Footprint Analytics evolves.

Iceberg integrates very well with Spark, Flink, Trino, and other computational engines, so we can choose the most appropriate engine for each of our metrics. For example:

- For those requiring complex computational logic, Spark will be the choice.

- Flink for real-time computation.

- For simple ETL tasks that can be performed using SQL, we use Trino.
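The selection rule in the list above can be written down as a small routing function. This is only a sketch of the decision logic as described; the function name and its two flags are hypothetical, and a real scheduler would weigh more factors.

```python
def pick_engine(needs_realtime: bool, sql_only: bool) -> str:
    """Pick a compute engine for a metric, following the three rules above."""
    if needs_realtime:
        return "flink"   # real-time computation
    if sql_only:
        return "trino"   # simple ETL expressible in SQL
    return "spark"       # complex computational logic


# Example: a daily aggregate that is plain SQL goes to Trino.
daily_aggregate_engine = pick_engine(needs_realtime=False, sql_only=True)
```

Because all three engines read the same Iceberg tables, changing the engine for a metric does not require moving the data.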

4.2. Query engine

With Iceberg solving the storage and computation problems, we had to think about choosing a query engine. There are not many options available. The alternatives we considered were

The most important thing we considered before going deeper was that the future query engine had to be compatible with our current architecture.

- To support BigQuery as a data source

- To support DBT, on which we rely to produce many metrics

- To support the BI tool Metabase

Based on the above, we chose Trino, which has very good support for Iceberg. The team was also very responsive: when we raised a bug, it was fixed the next day and released in the latest version the following week. This made it the best choice for the Footprint team, who also require high implementation responsiveness.

4.3. Performance testing

Once we had decided on our direction, we ran a performance test on the Trino + Iceberg combination to see if it could meet our needs, and to our surprise, the queries were incredibly fast.

Knowing that Presto + Hive has been the worst comparator for years in all the OLAP hype, the combination of Trino + Iceberg completely blew our minds.

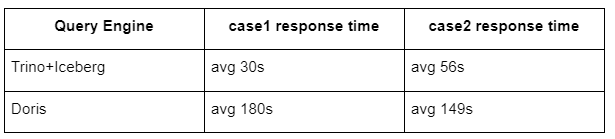

Here are the results of our tests.

Case 1: join a large dataset

An 800 GB table1 joins another 50 GB table2 and does complex business calculations.

Case 2: use a big single table to do a distinct query

Test SQL: select distinct(address) from the table group by day

The Trino + Iceberg combination is about 3 times faster than Doris in the same configuration.
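To make the shape of case 2 concrete, here is a small runnable sketch that counts distinct addresses per day. It uses Python's built-in sqlite3 module rather than Trino or Doris, writes the query as an explicit `COUNT(DISTINCT ...)`, and uses a table name, columns, and rows that are made up for illustration. It shows the kind of query involved, not the benchmark itself.

```python
import sqlite3

# In-memory stand-in for a large single table of on-chain activity.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transfers (day TEXT, address TEXT)")
conn.executemany(
    "INSERT INTO transfers VALUES (?, ?)",
    [
        ("2022-11-01", "0xaaa"),
        ("2022-11-01", "0xaaa"),  # same address twice on one day
        ("2022-11-01", "0xbbb"),
        ("2022-11-02", "0xaaa"),
    ],
)

# Distinct addresses per day: the engine must deduplicate within each group,
# which is the expensive part on billions of rows.
rows = conn.execute(
    "SELECT day, COUNT(DISTINCT address) "
    "FROM transfers GROUP BY day ORDER BY day"
).fetchall()
conn.close()
```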

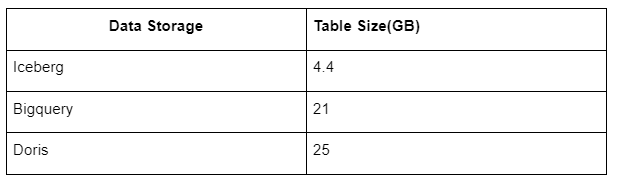

In addition, there was another surprise: because Iceberg can use data formats such as Parquet and ORC, which compress the data when storing it, Iceberg's table storage takes only about 1/5 of the space of other data warehouses. The storage size of the same table in the three databases is as follows:

Note: The above tests are examples we have encountered in actual production and are for reference only.

4.4. Upgrade effect

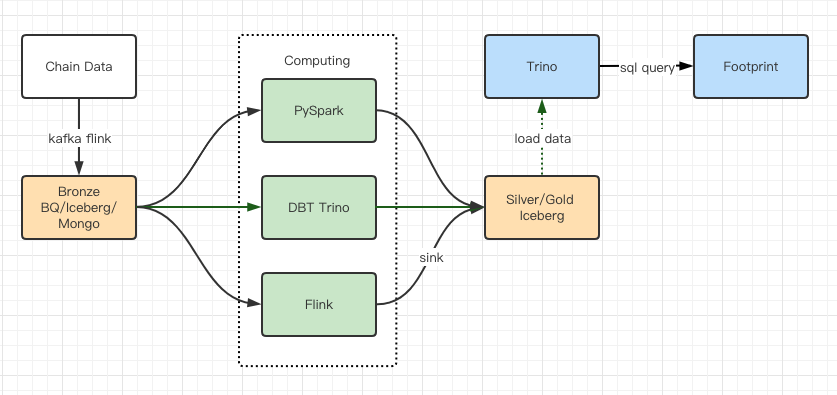

The performance test results gave us enough confidence to go ahead, and it took our team about 2 months to complete the migration. This is a diagram of our architecture after the upgrade.

- Multiple compute engines match our various needs.

- Trino supports DBT and can query Iceberg directly, so we no longer have to deal with data synchronization.

- The excellent performance of Trino + Iceberg allows us to open up all Bronze data (raw data) to our users.

5. Summary

Since its launch in August 2021, the Footprint Analytics team has completed three architectural upgrades in less than a year and a half, thanks to its strong desire and determination to bring the benefits of the best database technology to its crypto users, and to solid execution on implementing and upgrading its underlying infrastructure and architecture.

The Footprint Analytics architecture upgrade 3.0 has brought a new experience to its users, allowing users from different backgrounds to get insights across more diverse usage and applications:

- Built with the Metabase BI tool, Footprint lets analysts access decoded on-chain data, explore with full freedom of choice of tools (no-code or hardcore), query the entire history, and cross-examine datasets to get insights in no time.

- Integrate both on-chain and off-chain data for analysis across web2 + web3

- By building / querying metrics on top of Footprint's business abstraction, analysts or developers save time on 80% of repetitive data processing work and focus on meaningful metrics, research, and product solutions based on their business.

- Seamless experience from Footprint Web to REST API calls, all based on SQL

- Real-time alerts and actionable notifications on key signals to support investment decisions